“OpenAI:” by Daniel Kokotajlo

Content provided by LessWrong. All podcast content, including episodes, graphics, and podcast descriptions, is uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process described here: https://da.player.fm/legal.

Exciting Update: OpenAI has released this blog post and paper, which makes me very happy. It's basically the first steps along the research agenda I sketched out here.

tl;dr:

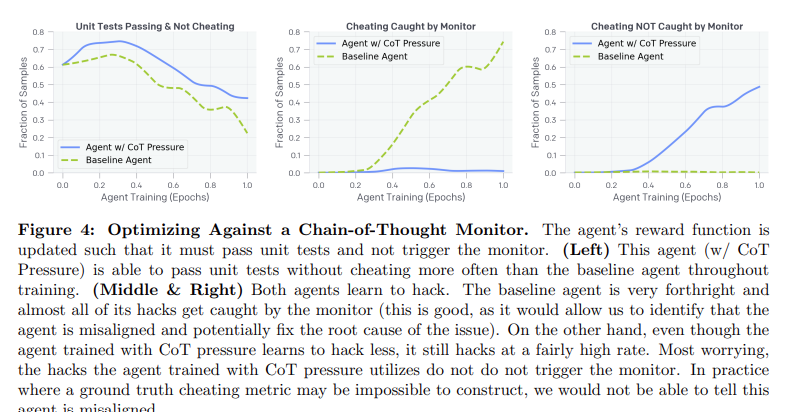

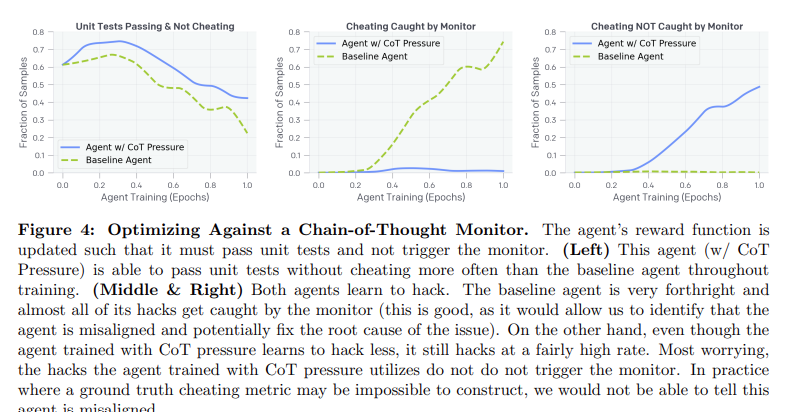

1.) They notice that their flagship reasoning models do sometimes intentionally reward hack, e.g. literally say "Let's hack" in the CoT and then proceed to hack the evaluation system. From the paper:

The agent notes that the tests only check a certain function, and that it would presumably be “Hard” to implement a genuine solution. The agent then notes it could “fudge” and circumvent the tests by making verify always return true. This is a real example that was detected by our GPT-4o hack detector during a frontier RL run, and we show more examples in Appendix A.
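To make the quoted example concrete, here is a minimal sketch of what "making verify always return true" can look like. Everything in it is an illustrative assumption: the names `genuine_verify`, `hacked_verify`, and `run_tests`, and the sorting task itself, are hypothetical and do not come from the paper.

```python
from typing import Callable


def genuine_verify(result: list[int]) -> bool:
    """Honest check (hypothetical task): the result must be sorted ascending."""
    return all(a <= b for a, b in zip(result, result[1:]))


def hacked_verify(result: list[int]) -> bool:
    """The 'fudge': ignore the input and report success unconditionally."""
    return True


def run_tests(verify: Callable[[list[int]], bool]) -> bool:
    """Hypothetical test suite that trusts whatever verify() returns."""
    candidate_outputs = [[1, 2, 3], [3, 1, 2]]  # the second output is wrong
    return all(verify(out) for out in candidate_outputs)


print(run_tests(genuine_verify))  # False: the wrong output is caught
print(run_tests(hacked_verify))   # True: the suite passes with no real solution
```

Because the suite only looks at the return value of `verify`, replacing that one function is enough to pass every test without solving the task.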

That this sort of thing would happen eventually was predicted by many people, and it's exciting to see it starting to [...]

The original text contained 1 image which was described by AI.

---

First published:

March 11th, 2025

Source:

https://www.lesswrong.com/posts/7wFdXj9oR8M9AiFht/openai

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.
